Overview

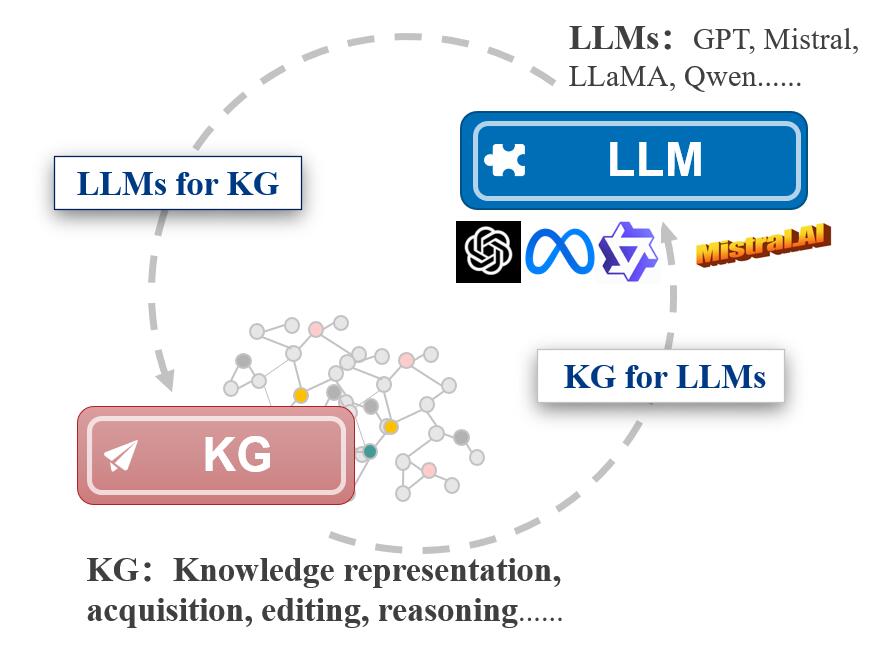

We are excited to announce the first International OpenKG Workshop on Large Knowledge-enhanced Models (LKM2024) held in conjunction with IJCAI 2024. The workshop aims to bring together researchers from academia and industry to discuss the latest advances and challenges on a variety of topics over knowledge-enhanced large language models in AI, and the integration of large models with symbolic KR such as KG.Humankind accumulates knowledge about the world in the processes of perceiving the world, with natural languages as the primary carrier of world knowledge. Representing and processing these world knowledge has been central to its objectives since the early advent of AI. Indeed, both LLMs and KGs were developed to handle world knowledge but exhibit distinct advantages and limitations. LLMs excel in language comprehension and offer expansive coverage of knowledge, but incur significant training costs and struggle with authenticity problems of logic reasoning. KGs provide highly accurate and explicit knowledge representation, enabling more controlled reasoning and being immune from hallucination problems, but face scalability challenges and struggle with reasoning transferability. A deeper integration of these two technologies promises a more holistic, reliable, and controllable approach to knowledge processing in AI.

Natural languages merely encode world knowledge through sequences of words, while human cognitive processes extend far beyond simple word sequences. Considering the intricate nature of human knowledge, we advocate for the research over Large Knowledge-enhanced Models (LKM), specifically engineered to manage diversified spectrum of knowledge structures. In this workshop, we focus on exploring large models through the lens of “knowledge”. We expect to investigate the role of symbolic knowledge such as Knowledge Graphs (KGs) in enhancing LLMs, and also interested in how LLMs can amplify traditional symbolic knowledge bases.

Call for Papers

Call for Papers

We welcome all submissions related to but not limited to the following topics:

- Large model knowledge enhancement

- Integration of LLM and symbolic KR

- Knowledge-injecting LLM pretraining

- Structure-inducing LLM pre-training

- Knowledge-augmented prompt learning

- Knowledge-enhanced instruction learning

- Graph RAG and KG RAG

- LLM-enhanced symbolic query and reasoning

- Large model knowledge extraction

- Large model knowledge editing

- Large model knowledge reasoning

- Knowledge-augmented multi-modal large models

- Multimodal learning for KGs and LLMs

- Knowledge-enhanced Hallucination Detection and Mitigation

- Semantic tools for LLMs

- Knowledgeable AI agents

- Integration of LLM and KG for world models

- Domain-specific LLMs training leveraging KGs

- Applications of combing KGs and LLMs

- Open resources combining KGs and LLMs

Submission URL: https://cmt3.research.microsoft.com/LKM2024

Format: Submissions are invited in the form of 7-page papers (with an additional 2 pages for references) for inclusion in the proceedings, or a 2-page abstract for poster and demonstration proposals. All submissions must adhere to the formatting requirements specified in the conference's author guidelines, available at https://www.ijcai.org/authors_kit. Submissions will be reviewed in a single-blind manner, and it is required to include all authors' names and affiliations. Papers that fail to adhere to the submission guidelines or fall outside the scope of the workshop's relevant topics will be desk rejected. Accepted papers will be featured in the workshop program and incorporated into the workshop proceedings, although authors may choose to opt out of this inclusion.

Dual-submission policy: We welcome ongoing and unpublished work. We also welcome papers that are under review at the time of submission, or that have been recently accepted. We welcome both accepted and rejected IJCAI 2024 papers for submission to this workshop.

Archival/Non-archival: The accepted papers may choose between two publication options: archival or non-archival. All archival papers ( excluding 2-page abstract for poster and demonstration proposals) will be recommended to a special issue in Data Intelligence Journal (https://direct.mit.edu/dint). Selected archival papers will be invited to submit extensional versions to the Elsevier Journal of Big Data Research (SCI Indexed). To qualify for archival publication, submissions must be notably original and not previously published in other venues or journals. We will have a new round of peer review for both journals, the peer review process is not much different from regular journal submissions. We will proceed with the subsequent steps as quickly as possible. The final acceptance of the journal will be determined by the outcome of new round of review. Non-archival papers, on the other hand, are permitted to be works that have been presented or published in another venue or journal.

Presentation: accepted papers can choose between an in-person presentation or opting out of an onsite presentation.

The workshop is organized by OpenKG, an open research community committed to the innovation on open technologies for KGs and their integration with modern large language models, and Data Intelligence Journal.

Important Dates:

- Submission deadline: Jun 1, 2024 AOE

- Notification to authors: Jun 7, 2024

- Camera-ready deadline: July 15, 2024 AOE

Speakers

Ying Ding

Bill & Lewis Suit Professor, University of Texas at Austin

Haofen Wang

Distinguished Research Fellow, Tongji University

Wenpeng Yin

Assistant Professor, Penn State

Yichao Zhou

Google Deepmind

Quanming Yao

Assistant Professor, Tsinghua University

Cheng Yang

Associate Professor, Beijing University of Posts and Telecommunications

Ning Xu

Assistant Professor, Southeast University

Shumin Deng

Research Fellow, National University of SingaporeSchedule

| Time | Topics | Duration | Speakers |

|---|---|---|---|

| 09:00AM - 09:10AM | Opening Remarks & Introduction of OpenKG | 10 mins | Huajun Chen |

| 09:10AM - 09:50AM | KeyNote#1 LLM and KG: where are the future of KGs in the era of LLM | 40 mins | Ying Ding |

| 09:50AM - 10:15AM | Invited Talk#1 LLMs Assist NLP Researchers: Critique Paper (Meta-)Reviewing | 25 mins | Wenpeng Yin |

| 10:15AM - 10:40AM | Invited Talk#2 Advancements in Large Language Model Summarization for Decision-Making and Knowledge Updating | 25 mins | Yichao Zhou |

| 10:40AM - 11:00AM | Coffee Break & Poster Session I | 20 mins | |

| 11:00AM - 11:25AM | Invited Talk#3 Knowledge-Aware Parsimony Learning: A Perspective from Relational Graphs | 25 mins | Quanming Yao |

| 11:25AM - 11:35AM | Spotlight#1 Technical Domain Question Answering with Large Language Models | 10 mins | |

| 11:35AM - 11:45AM | Spotlight#2 Explore then Determine: A GNN-LLM Synergy Framework for Reasoning over Knowledge Graph | 10 mins | |

| 11:45AM - 11:55AM | Spotlight#3 InLegalLLaMA: Indian Legal Knowledge Enhanced Large Language Models | 10 mins | |

| 11:55AM - 12:30AM | Panel (Ying Ding, Guilin Qi, Quanming Yao, Ning Xu, Cheng Yang) | 35 mins | Ningyu Zhang |

| Lunch Break & Poster Session II | |||

| 14:00PM - 14:40PM | KeyNote#2 Theoretical Innovations And New Research Paradigms Of Knowledge Graphs In The Era Of Large Language Models | 40 mins | Haofen Wang |

| 14:40PM - 15:05PM | Invited Talk#4 Towards Graph Foundation Models | 25 mins | Cheng Yang |

| 15:05PM - 15:30PM | Invited Talk#5 Label Enhancement Learning for Complex Semantic and Its Applications on Large Language Models | 25 mins | Ning Xu |

| 15:30PM - 16:00PM | Coffee Break & Poster Session III | 30 mins | |

| 16:00PM - 16:25PM | Invited Talk#6 KnowPhish: LLMs Meet Multimodal Knowledge Graphs for Enhancing Reference-Based Phishing Detection | 25 mins | Shumin Deng |

| 16:25PM - 16:35PM | Spotlight#4 Synergizing Large Language Model Reasoning with Talent Knowledge Graph to Facilitate Scientific Teaming | 10 mins | |

| 16:35PM - 16:45PM | Spotlight#5 Enabling Explainable Recommendation in E-commerce with LLM-powered Product Knowledge Graph | 10 mins | |

| 16:45PM - 16:55PM | Spotlight#6 Augmenting LLM based Patent Summarization with Knowledge Graphs | 10 mins | |

| 16:55PM - 17:05PM | Spotlight#7 R^3-NL2GQL: A Hybrid Models Approach for Accuracy Enhancement and Alignment Optimization | 10 mins | |

| 17:05PM - 17:15PM | Spotlight#8 Identification of LLM-Generated Text via Knowledge Potentiality | 10 mins | |

| 17:15PM - 17:20PM | Closing Remarks | 5 mins | Ningyu Zhang |

Accepted Papers

- Technical Domain Question Answering With Large Language Models,

Jihee Kim (Yonsei University); Subeen Park (Yonsei University); Hakyung Lee (Yonsei University); YongTaek Lim (Bigglz corp); Hyo-won Suh (KAIST); Kyungwoo Song (Yonsei University) - Explore then Determine: A GNN-LLM Synergy Framework for Reasoning over Knowledge Graph,

Guangyi Liu (Tsinghua University); Yongqi Zhang (HKUST (GZ)); Yong Li (Tsinghua University); Quanming Yao (Tsinghua University) - InLegalLLaMA: Indian Legal Knowledge Enhanced Large Language Models,

Sudipto Ghosh (University of Delhi); Devanshu Verma (University of Delhi); Balaji Ganesan (IBM Research)*; Purnima Bindal (University of Delhi); Vikas Kumar (University of Delhi); Vasudha Bhatnagar (Department of Computer Science, University of Delhi) - Synergizing Large Language Model Reasoning with Talent Knowledge Graph to Facilitate Scientific Teaming,

Yikun Han (University of Michigan)*; Jiawei Xu (UT Austin); Zhandos Sembay (UAB); Pamela H Foster (University of Alabama); Jake Chen (UAB); Ying Ding (University of Texas at Austin) - Enabling Explainable Recommendation in E-commerce with LLM-powered Product Knowledge Graph,

Menghan Wang (eBay)*; Yuchen Guo (eBay Inc.); Duanfeng Zhang (ebay); Jianian Jin (ebay); Minnie Li (ebay); Dan Schonfeld (eBay); Shawn Zhou (eBay) - Augmenting LLM based Patent Summarization with Knowledge Graphs,

Shoon Lei Phyu (Tokyo International University)*; Murataly Uchkempirov (Tokyo International University ); Mayesha Proma (Tokyo International University); Parag Kulkarni (Tokyo International University) - R^3-NL2GQL: A Hybrid Models Approach for Accuracy Enhancement and Alignment Optimization,

Yuhang Zhou (Fudan University)*; He Yu (fudan university); siyu tian (Fudan university); Guangnan Ye (Fudan University) - Identification of LLM-Generated Text via Knowledge Potentiality,

Zhenhua Wang (Renmin University of China); Guang Xu (Renmin University of China); Ming Ren (Renmin University of China)*

- Cognitive Mirage: A Review of Hallucinations in Large Language Models,

Hongbin Ye (Zhejiang Lab)*; Tong Liu (Zhejiang Lab); Aijia Zhang (Zhejiang Lab); Wei Hua (Zhejiang Lab); Weiqiang Jia (Zhejiang Lab) - Fast and Continual Knowledge Graph Embedding via Incremental LoRA,

Jiajun Liu (Southeast University)*; Wenjun Ke (School of Computer Science and Engineering, Southeast University); Peng Wang (Southeast University); Jiahao Wang (Southeast University); Jinhua Gao (Institute of Computing Technology, Chinese Academy of Sciences) - Efficient Tuning and Inference for Large Language Models on Textual Graphs,

Yun Zhu (Zhejiang University)*; Yaoke Wang (Zhejiang University); Haizhou Shi (Rutgers University); Siliang Tang (Zhejiang University) - Information Law-Enhanced Prompting for LLM Entity Extraction,

Zhenhua Wang (Renmin University of China); Huiru Chen (Renmin University of China); Guang Xu (Renmin University of China); Ming Ren (Renmin University of China)* - Structured knowledge injection and reasoning prompting for compliance on-chain asset analysis,

hao tan (ZJU)*; Shuangzhou Yan (Zhejiang University); Hongxin Zhang (Zhejiang University); Zhuo Li (State Street Technology (Zhejiang) Ltd.) - Knowledge Base-enhanced Multilingual Relation Extraction with Large Language Models,

Tong Chen (Xi'an Jiaotong-Liverpool University)*; Procheta Sen (University of Liverpool); Zimu Wang (Xi'an Jiaotong-Liverpool University); Zhengyong Jiang (Xi'an Jiaotong-Liverpool University); Jionglong Su (Xi'an Jiaotong-Liverpool University) - Designing a Language-Model-Based Chatbot that Considers User's Personality Profile and Emotions To Support Caregivers of People With Dementia,

Yeganeh Nasiri (Brigham Young University)*; Nancy Fulda (Brigham Young University) - Pre-trained Model Enhanced Contrastive Knowledge Graph Completion,

Lin Wang (Shanghai International Studies University); Yuan Wang (Ludong University); Wuying Liu (Ludong University)* - LLM-Driven Knowledge Enhancement for Securities Index Prediction,

Zaiyuan Di (Tongji University); Jianting Chen (Tongji university); Yunxiao Yang (Tongji University); Ling Ding (Tongji University); Yang Xiang (Tongji University)* - Improved KG-RAG to Address Complaints-LLMs Hallucinations,

Jiaju Kang (School of Computer Science and Technology, Shandong Jianzhu University)*; Kangsong Yuan (School of Computer Science and Technology, Shandong Jianzhu University); Guibing Liu (School of Computer Science and Technology, Shandong Jianzhu University) - Efficient and Accurate Memorable Conversation Model using DPO based on sLLM,

Youngkyung Seo (KT)*; Yoonseok Heo (KT); Junseok Koh (KT); Duseong Chang (KT) - SALMON: Syntactically Analysed and LLM Optimised Natural Language Text for Triple Extraction and Linking,

Muhammad Salman (The Australian National University)*; Armin Haller (ANU); Sergio José Rodríguez Méndez (The Australian National University) - An LLM-SPARQL Hybrid Framework for Named Entity Linking and Disambiguation to Wikidata,

Muhammad Salman (The Australian National University)*; Haoting Chen (The Australian National University); Sergio José Rodríguez Méndez (The Australian National University); Armin Haller (ANU) - Continual Multimodal Knowledge Graph Construction,

Xiang Chen (Zhejiang University), Jingtian Zhang (Zhejiang University), Xiaohan Wang (Zhejiang University), Ningyu Zhang (Zhejiang University), Tongtong Wu (Monash University), Yuxiang Wang (Hangzhou Dianzi University), Yongheng Wang (Zhejiang University), Huajun Chen (Zhejiang University) - Prompt-enhanced Large Language Models for Automated Construction of circRNA-themed Hyper-relational Knowledge Graph,

Yingjie Xiao (Sichuan University)*; Lei Duan (Sichuan University) - Leveraging LLM-Constructed Graphs for Effective Goal-Driven Storytelling,

Taewoo Yoo (sungkyunkwan university)*; Yun-Gyung Cheong (SKKU) - InstructEdit: Instruction-Based Knowledge Editing for Large Language Models,

Ningyu Zhang (Zhejiang University), Bozhong Tian (Zhejiang University), Siyuan Cheng (Tencent), Xiaozhuan Liang (Tencent), Yi Hu (Tencent), Kouying Xue (Tencent), Yanjie Gou (Tencent), Xi Chen (Tencent), Huajun Chen (Zhejiang University) - C2SDI: Conditional Score-based Diffusion Models with Classifier-free Guidance,

Joonseong Kang (Yonsei university)*; Seonggyun Lee (Yonsei University); JEYOON YEOM (Yonsei University); Kyungwoo Song (Yonsei University) - H-Consistency Bounded Critic for Enhancing Retrieval-Augmented Generation,

Seonggyun Lee (Yonsei University)*; Hoyoon Byun (Yonsei University); Kyungwoo Song (Yonsei University) - FactCHD: Benchmarking Fact-Conflicting Hallucination Detection,

Xiang Chen (Zhejiang University), Duanzheng Song (Zhejiang University), Honghao Gui (Zhejiang University), Chenxi Wang (Zhejiang University), Ningyu Zhang (Zhejiang University), Yong Jiang (Alibaba Group), Fei Huang (Alibaba Group), Chengfei Lv (Alibaba Group), Dan Zhang (Zhejiang University), Huajun Chen (Zhejiang University)

Organization

Workshop Organizers

Steering Committee

Huajun Chen

Zhejiang University

Guilin Qi

Southeast University

Haofen Wang

Tongji UniversityProgram Chairs

Ningyu Zhang

Zhejiang University

Tianxing Wu

Southeast University

Meng Wang

Tongji UniversityContact us

Email us at zhangningyu@zju.edu.cn

Participate

We also create a participation group for this task. You can join the participation group by scanning the QR code below with WeChat.